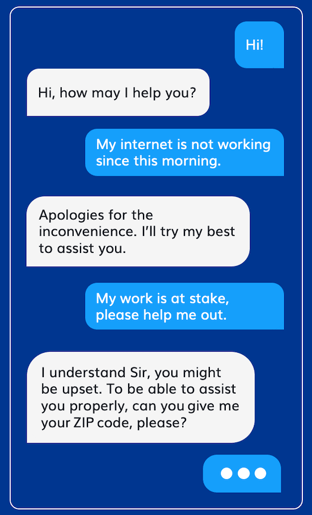

Imagine a conversation between a disappointed customer and a customer service representative:

I’m sure we've all experienced exchanges like this, but what stands out here? The answer is empathy from a human agent. It took a mere two responses to figure that out, right? This ability to connect with others through emotion is ingrained in human language. We detect the emotional tone of a conversation by gauging its polarity and magnitude. This enables us to respond with emotional intelligence and empathy, and to deliver a better customer experience by connecting with the other person.

Polarity indicates whether the sentiment is positive, neutral, or negative, and magnitude expresses how strongly the customer feels it.
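To make polarity and magnitude concrete, here is a minimal Python sketch. It is only an illustration: the word list, the integer scores, and the `score_sentiment` function are assumptions made up for this example, and real sentiment models (including whatever a given platform uses) are far more sophisticated than a word lookup.

```python
# Toy lexicon: word -> sentiment score. The words and values are
# illustrative assumptions, not taken from any real product or dataset.
LEXICON = {
    "great": 2, "happy": 2, "thanks": 1,
    "late": -1, "disappointed": -2, "terrible": -3,
}

def score_sentiment(text):
    """Return (polarity, magnitude) for a piece of text.

    polarity  : "positive", "neutral" or "negative" (sign of the summed scores)
    magnitude : total emotional strength (sum of absolute scores)
    """
    words = [w.strip(".,!?").lower() for w in text.split()]
    hits = [LEXICON[w] for w in words if w in LEXICON]
    total = sum(hits)
    magnitude = sum(abs(h) for h in hits)
    if total > 0:
        polarity = "positive"
    elif total < 0:
        polarity = "negative"
    else:
        polarity = "neutral"
    return polarity, magnitude

print(score_sentiment("I am disappointed, the delivery was late!"))  # ('negative', 3)
```

Note that magnitude is tracked separately from polarity: a message containing both strong praise and a strong complaint could come out neutral overall yet still carry a high magnitude, which is itself a useful signal.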

So, the question arises: Humans understand emotions — does AI?

To delve into this, let's explore what sentiment analysis is all about.

What is Sentiment Analysis?

It is a branch of machine learning and natural language processing that uses language algorithms to decode the emotional tone of conversations. It works by sensing and quantifying the positive, neutral, and negative feelings within our conversations.

But what makes sentiment analysis so valuable? Human communication is more than an exchange of words or neutral ideas. It includes the intricate expression of feelings, way beyond simple semantics. Sentiment analysis provides insight into the actual mood behind the text, even in complex statements. In a nutshell, it captures the emotional side of social interaction, and that insight can be used to improve how you respond to customers.

Some of the leading benefits of sentiment analysis:

1. Spotting Key Emotional Triggers

Emotional triggers drive our daily decisions. With sentiment analysis, you can better spot which parts of a conversation act as triggers that alter customer attitude. For example, the all-time cliché “Please wait” often makes customers cringe. Realizing which messages evoke certain emotions in your customers can help you better understand the impact of your words, whether in voice, in text, or via a virtual agent.

2. Human Agent Handover

With sentiment analysis, you can train your virtual agent to identify the customer's mood and respond to it appropriately. If the chatbot detects that the customer is infuriated, irritated, or displeased, it can escalate to a human agent at the right time. This helps you deliver solutions at the earliest moment and avoid frustrating users.

3. Improving Request Quality at Peak Hours

During a hectic day at the contact center, customer service representatives can find themselves handling multiple customers simultaneously. Keeping track of how each customer is feeling can be a challenge for the manager, especially during busy hours. Sentiment analysis gives you a glimpse of which chats are going smoothly and which need further attention, thereby improving the quality of support.

4. Overall Sentiment for your Brand

Sentiment analysis can be used to identify your happiest customers. But the real benefit lies in recognizing negative statements about your brand, products, or services that may require attention so you can soothe disgruntled customers. As the saying goes, forewarned is forearmed: the better you understand customers, the better you can help them and craft a better experience.

5. Adaptive Customer Service

Humans can say the exact same words with joy, sarcasm, or regret. But that can be a hard nut to crack for bots. With sentiment analysis, it is easier for your Virtual Agents to adapt to the tone of the customer conversation and respond accordingly. This makes conversational AI more natural and engaging.
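The human agent handover described in the list above can be sketched as a simple rule on top of per-message sentiment scores. This is a hedged illustration only: the `Conversation` class, the thresholds, and the integer scoring scale (negative numbers for negative sentiment) are assumptions invented for this example and do not describe how any particular platform implements escalation.

```python
from dataclasses import dataclass, field

# Assumed thresholds, chosen only for illustration.
STRONG_NEGATIVE = -3     # a single message this negative triggers handover
MAX_NEGATIVE_TURNS = 2   # this many negative messages in a row also triggers it

@dataclass
class Conversation:
    """Tracks one sentiment score per customer message (hypothetical helper)."""
    scores: list = field(default_factory=list)

    def add_turn(self, score):
        self.scores.append(score)

    def should_hand_over(self):
        """Escalate on one very negative message or a streak of negative ones."""
        if not self.scores:
            return False
        if self.scores[-1] <= STRONG_NEGATIVE:
            return True
        recent = self.scores[-MAX_NEGATIVE_TURNS:]
        return len(recent) == MAX_NEGATIVE_TURNS and all(s < 0 for s in recent)

chat = Conversation()
for score in (1, -1, -1):      # friendly start, then two irritated messages
    chat.add_turn(score)
print(chat.should_hand_over())  # True
```

Looking at a short streak rather than a single mildly negative message is a design choice: it avoids escalating on one grumpy remark while still catching a conversation that is steadily going wrong.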

By now, it is apparent that sentiment analysis has numerous advantages. Let's look at how sentiment analysis and conversational AI together can take the customer experience to another level.

Sentiment Analysis with Conversational AI

Most people, even when they know they are interacting with a Conversational AI, will convey feelings as if they were talking with another person.

As AI continues to gain traction in modern customer service, bots are becoming a vital part of the user experience. After all, the purpose is not just to provide faster answers. As an organization, you want to create a smoother and quicker customer experience. Therefore, the focus should be on building better Virtual Agents that understand complex and distinct human sentiments and adapt their responses accordingly. And this is possible via sentiment analysis.

Thus, a Virtual Agent with “sentiment analysis” capabilities can provide more human-like responses by deciphering sentence structure clues. It can steer the conversation in the right direction, capitalize on customer delight, and even let a human agent take over at the right time.

The outcome: Conversational experiences that are more natural and engaging than ever.

The beauty of sentiment analysis is that you can analyze language in many forms, whether text or speech, and across multiple channels. So if you plug Cognigy into Amazon Connect, for example, you can have it monitor your phone calls as well as voice and text in other channels such as WhatsApp, live chat, or Instagram.

Check out how Cognigy.AI uses Sentiment Analysis to detect human emotions in the following video.
