The latest version of Cognigy.AI introduces new error handling features to maximize the reliability of LLM and API operations for your AI Agents.

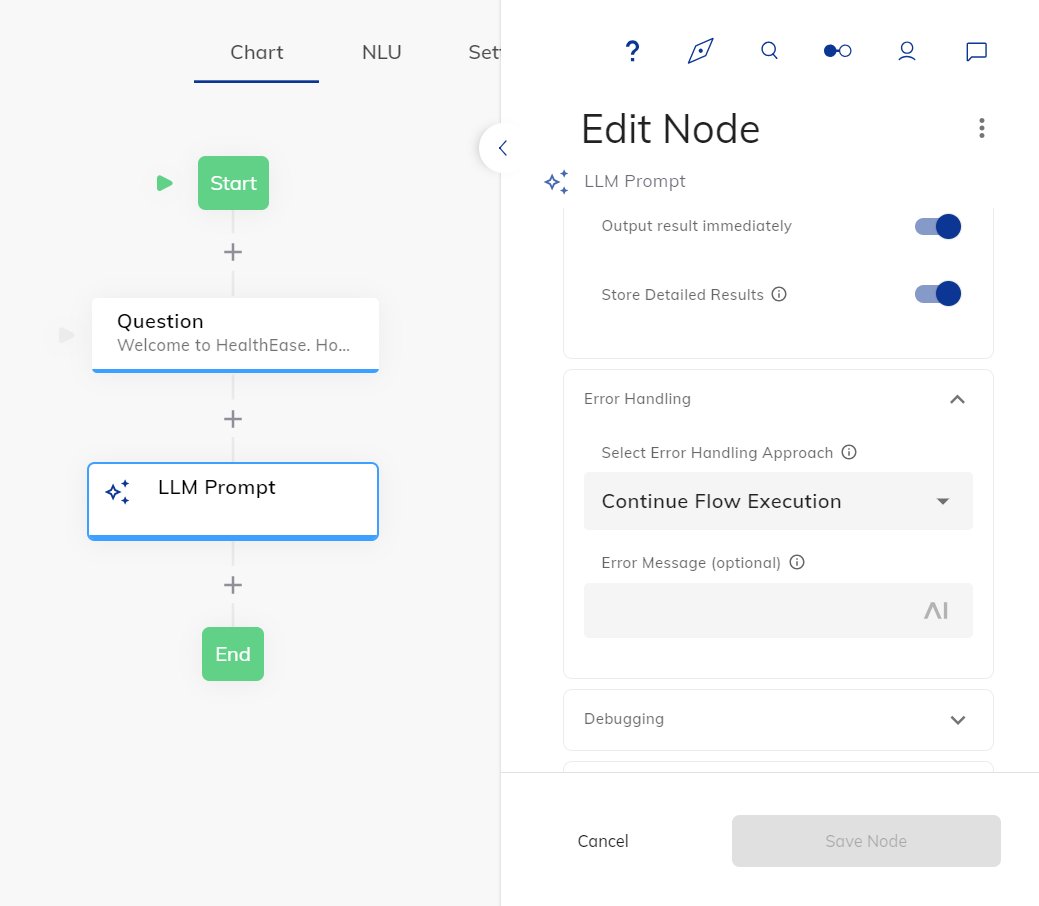

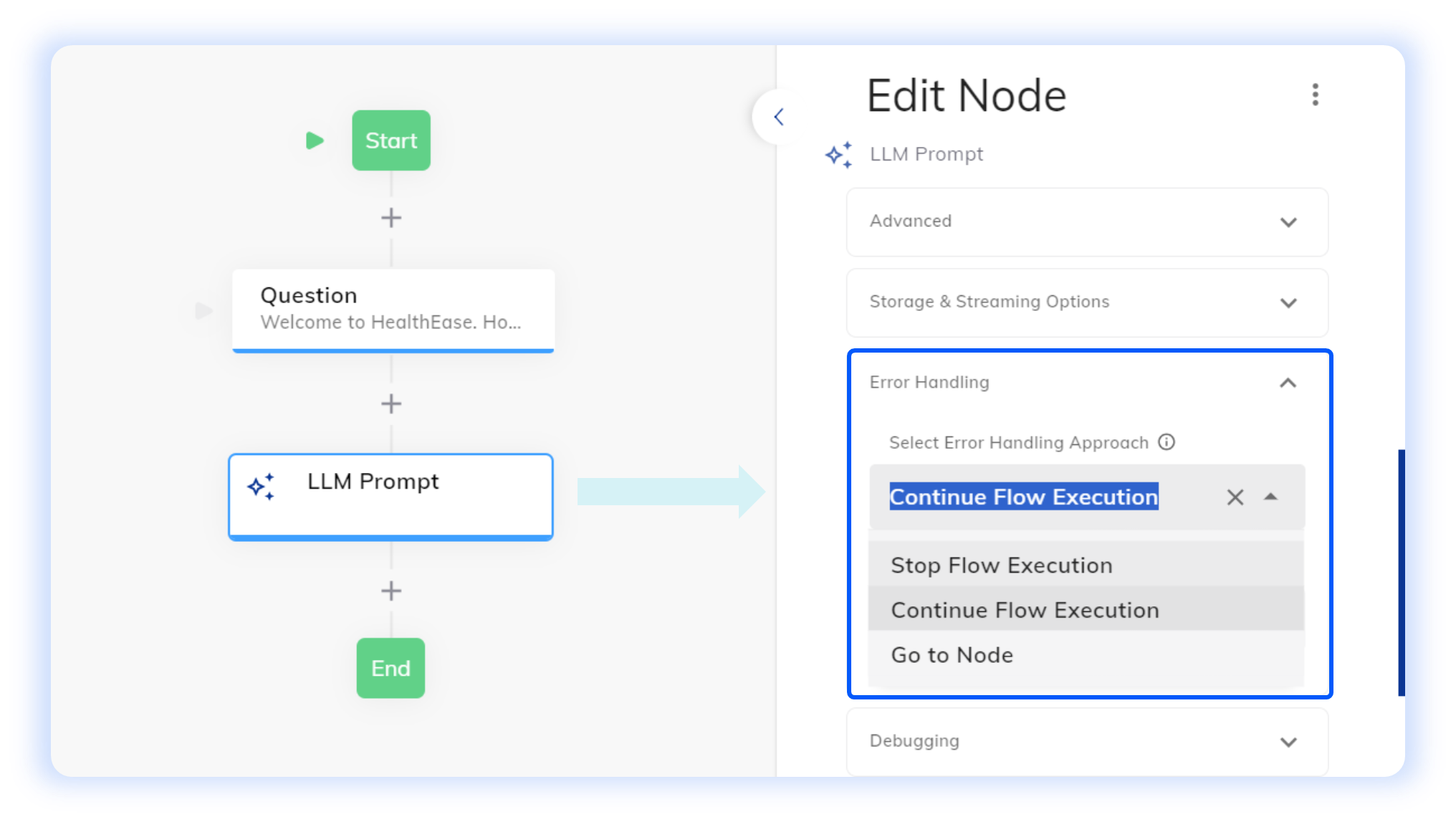

LLM Error Handling Options

Large Language Models (LLMs) can encounter a variety of errors, such as requests that exceed token limits, request timeouts, or authentication issues. To keep such errors from disrupting service, the LLM Prompt Node now offers three Error Handling options:

- Stop Flow Execution – terminate the current Flow execution.

- Continue Flow Execution – allow AI Agents to bypass the error and proceed to the next steps.

- Go to Node – redirect the workflow to a specific Node in the Flow for error recovery or customized error handling.

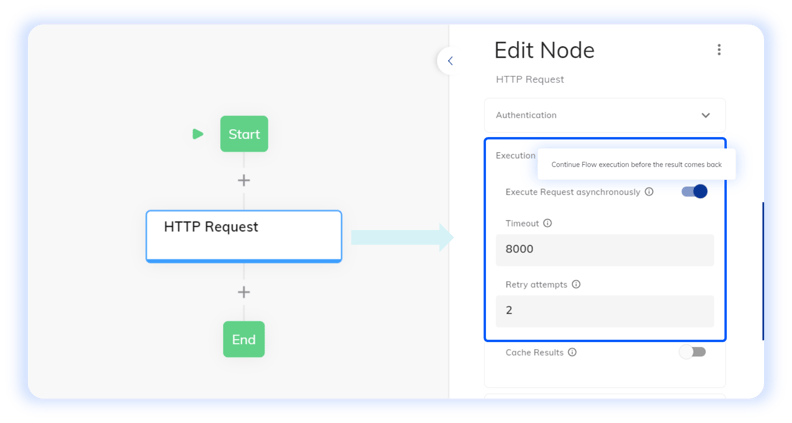
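To make the difference between the three options concrete, here is a minimal conceptual sketch in Python. It is not Cognigy.AI code and does not reflect how the LLM Prompt Node is implemented: the `ErrorHandling` enum, the `run_flow` function, and the step names are all hypothetical, and a Flow is simplified to an ordered list of steps. The sketch also assumes that a second error after a jump stops the run, which is a choice made here to keep the example from looping.

```python
from enum import Enum


class ErrorHandling(Enum):
    # Hypothetical names mirroring the three options described above.
    STOP = "Stop Flow Execution"
    CONTINUE = "Continue Flow Execution"
    GO_TO_NODE = "Go to Node"


def run_flow(steps, mode, recovery_node=None):
    """Run (name, action) steps in order; return the names that completed."""
    names = [name for name, _ in steps]
    completed = []
    jumped = False
    index = 0
    while index < len(steps):
        name, action = steps[index]
        try:
            action()
            completed.append(name)
        except Exception:
            if mode is ErrorHandling.STOP:
                break  # terminate the current run
            if mode is ErrorHandling.GO_TO_NODE:
                if jumped:
                    break  # assumption: a second error ends the run
                jumped = True
                index = names.index(recovery_node)
                continue  # resume at the recovery step
            # CONTINUE: skip the failed step and move on
        index += 1
    return completed


def failing_llm_call():
    # Stand-in for an LLM request that times out.
    raise TimeoutError("simulated LLM request timeout")


demo_steps = [
    ("greet", lambda: None),
    ("llm_prompt", failing_llm_call),
    ("answer", lambda: None),
    ("fallback", lambda: None),
]
```

With these demo steps, stopping ends the run right after `greet`, continuing skips only the failed `llm_prompt` step, and going to the `fallback` step skips `answer` as well.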

Asynchronous Request Execution for API Calls

When the Asynchronous Request Execution option is activated, the HTTP Node will execute the request without awaiting a response, avoiding delays in Flow execution. Two options are available for configuration:

- The Timeout (in milliseconds) for canceling the request.

- The number of Retry Attempts when an error occurs.
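The general pattern behind this option can be sketched in plain Python. This is an illustration of fire-and-forget requests with a timeout and retries, not the HTTP Node's actual implementation. The function names `send_with_retries`, `fire_and_forget`, and `http_get` are hypothetical, and the sketch assumes that Retry Attempts counts retries in addition to the first attempt.

```python
import threading
import urllib.request


def send_with_retries(send, timeout_ms, retry_attempts):
    """Call send(timeout_seconds) until it succeeds or retries run out.

    Returns (attempts_made, last_error); last_error is None on success.
    """
    attempts = 0
    last_error = None
    # Assumption: one initial attempt plus `retry_attempts` retries.
    for _ in range(1 + retry_attempts):
        attempts += 1
        try:
            send(timeout_ms / 1000)  # convert milliseconds to seconds
            return attempts, None
        except OSError as error:  # URLError and socket timeouts are OSErrors
            last_error = error
    return attempts, last_error


def fire_and_forget(send, timeout_ms, retry_attempts):
    """Start the request in a background thread and return immediately."""
    worker = threading.Thread(
        target=send_with_retries,
        args=(send, timeout_ms, retry_attempts),
        daemon=True,  # do not keep the program alive for pending requests
    )
    worker.start()
    return worker


def http_get(url):
    """Build a send function that performs a GET and discards the body."""
    def send(timeout_seconds):
        with urllib.request.urlopen(url, timeout=timeout_seconds) as response:
            response.read()
    return send
```

A caller could start a request with something like `fire_and_forget(http_get("https://example.com/webhook"), timeout_ms=5000, retry_attempts=2)` (the URL is a placeholder) and carry on without waiting for the result. The trade-off is that the caller never sees the response, so this pattern suits notifications and logging calls more than requests whose result is needed later.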

Other Improvements

Cognigy.AI

- Added several new color options to the Webchat Layout section within Webchat v3 Endpoints

- Added the option to specify other logos and titles for the message header in the Webchat Layout section within Webchat v3 Endpoints

- Implemented the option to create a Web Page source type using the Knowledge AI wizard

- Added the capability to use new Anthropic models

- Retired several Microsoft Azure OpenAI models

- Added the capability to override the model version in Custom Model Options within the LLM Prompt Node. For example, you can override a legacy Anthropic model with a newer one

- Improved the tooltip description for the STT Hints parameter in the Set Session Config Node

- Improved the translation of AI Copilot sentiment tiles based on the Flow execution language

- Updated Demo Webchat v2 to version 2.59.1

Cognigy Live Agent

- Implemented customization of Live Agent user session duration through the AUTH_TOKEN_LIFESPAN environment variable

For further information, see our complete Release Notes.
