Cognigy.AI v4.66 lets you combine the richness of Generative AI with the structured nature of Conversational AI to flexibly handle unexpected turns in a user conversation, ensuring your AI Agent remains empathetic and business-focused.

Adeptly Navigate Non-Linear Dialogues Using LLMs

Digression handling is a critical capability for any AI Agent to manage the dynamic and unpredictable nature of human conversations.

As customers veer off the main topic or path, the AI Agent must comprehend the new query and provide a response relevant to the new topic. At the same time, it needs to retain the conversation context so it can transition smoothly back to the original path, ensuring a natural, effortless, and mission-focused interaction.

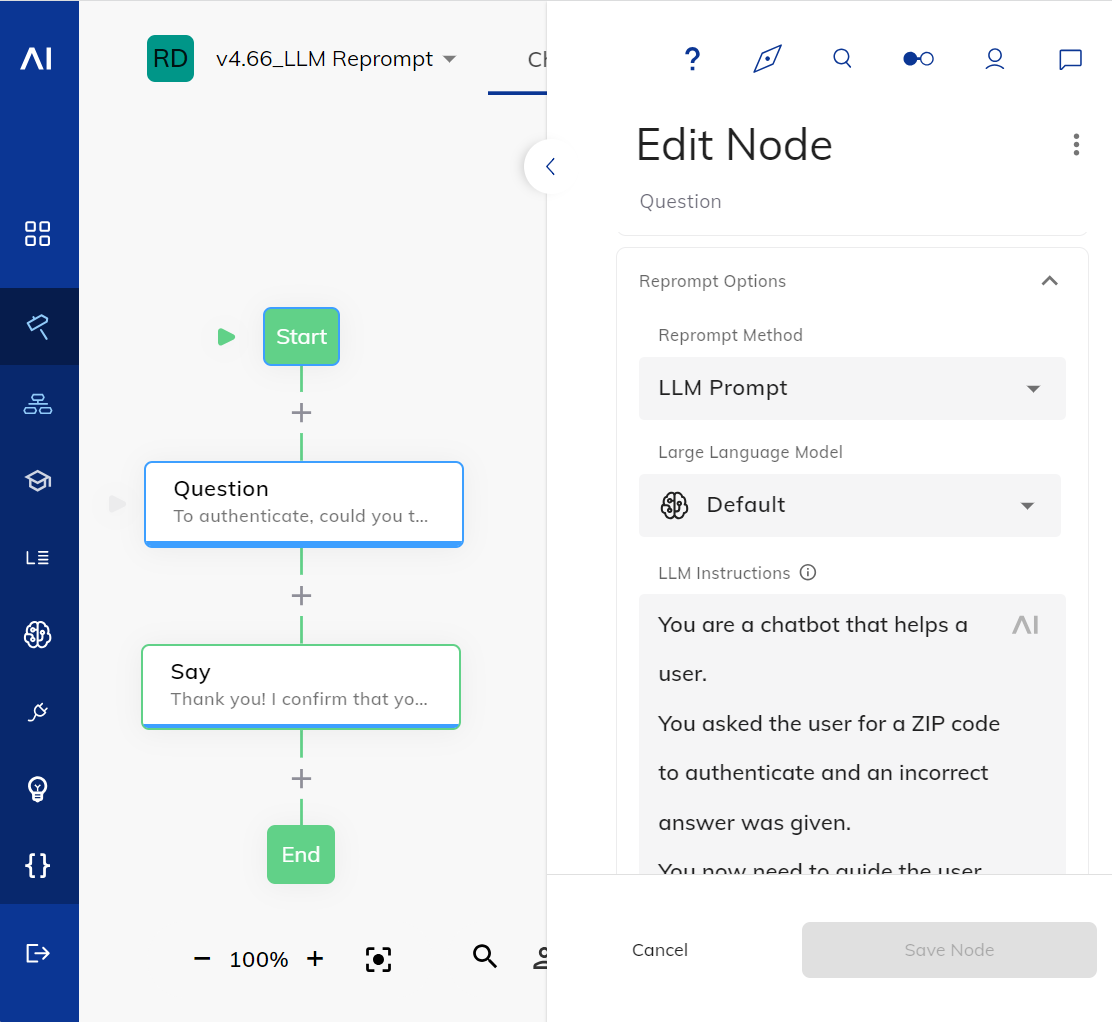

For effective digression handling, Cognigy.AI lets you automatically trigger Reprompt messages within the Question Node when the customer’s reply diverges from the original question. This feature, previously limited to simple text messages, now offers several approaches, including LLM integration for more sophisticated, dynamic responses.

When combined with Conversational AI, Generative AI is extremely powerful for digression handling: it enables AI Agents to embrace the non-linear nature of human dialogue and gracefully handle any topic divergence instead of following a rigid script. Setup is straightforward: directly within the Question Node, select your preferred Generative AI model and define your LLM instruction.
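To make the idea concrete, here is a minimal, platform-independent sketch of LLM-backed reprompting. It is not Cognigy.AI's implementation or API: `QuestionState`, `handle_turn`, the `validate` callback, and the `llm` callable are all hypothetical names chosen for illustration. The sketch only shows the general pattern of answering a digression while keeping the original question in context.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Tuple


@dataclass
class QuestionState:
    """Hypothetical stand-in for the state of a pending question."""
    question: str      # the question the flow is still waiting on
    instruction: str   # the LLM instruction used when the user digresses


def handle_turn(
    state: QuestionState,
    user_input: str,
    validate: Callable[[str], Optional[str]],
    llm: Callable[[str], str],
) -> Tuple[Optional[str], Optional[str]]:
    """Return (answer, reprompt).

    If the input answers the question, answer is set and reprompt is None.
    Otherwise the input is treated as a digression: the LLM is asked to
    respond to it and steer back to the original question.
    """
    answer = validate(user_input)
    if answer is not None:
        return answer, None

    # Keep the original question in the prompt so the reply can return to it.
    prompt = (
        f"{state.instruction}\n"
        f"Original question: {state.question}\n"
        f"User said: {user_input}"
    )
    return None, llm(prompt)


def parse_number(text: str) -> Optional[str]:
    """Toy validator (assumption): accept the input only if it is all digits."""
    cleaned = text.strip()
    return cleaned if cleaned.isdigit() else None
```

In use, `llm` would wrap whichever Generative AI model you selected; the flow stays on the same question until `handle_turn` returns a non-empty answer.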

For more insights and use cases of LLM-powered digression handling, check out our latest Tech Update Webinar.

Improved Call Tracing for Voice Interactions

Our newest release also introduces notable improvements to the Call Tracing section in the Voice Gateway Self-Service Portal. These include:

- Visualized STT latency for VUX optimization: You can now view the exact time required for the speech recognition software to return the transcription. The latency between the STT result and the next bot response indicates the time taken to receive and process the transcript for output generation. This granular breakdown allows you to trace every step of your voice agent for advanced diagnostics and troubleshooting.

- Added support for Outbound Call Tracing: Cognigy.AI v4.66 further extends the availability of Call Tracing features to outbound calls where the AI Agent initiates the conversation.
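As a rough illustration of the latency breakdown described above, the sketch below splits one voice turn into an STT portion and a processing portion. The timestamp field names (`speech_end_ms`, `transcript_ms`, `response_start_ms`) are assumptions made for this example and do not reflect the Voice Gateway's actual trace format.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class TurnTimestamps:
    """Hypothetical timestamps for one voice turn, in milliseconds."""
    speech_end_ms: int      # caller stops speaking
    transcript_ms: int      # STT returns the transcription
    response_start_ms: int  # next bot response begins


def latency_breakdown(t: TurnTimestamps) -> Dict[str, int]:
    """Split the silence the caller hears into STT and processing time."""
    stt = t.transcript_ms - t.speech_end_ms
    processing = t.response_start_ms - t.transcript_ms
    return {"stt_ms": stt, "processing_ms": processing, "total_ms": stt + processing}


turn = TurnTimestamps(speech_end_ms=1000, transcript_ms=1350, response_start_ms=2100)
print(latency_breakdown(turn))
# {'stt_ms': 350, 'processing_ms': 750, 'total_ms': 1100}
```

Separating the two portions shows whether a slow turn is caused by speech recognition or by the steps that run after the transcript arrives.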

Learn more about our Call Tracing feature here.

Other Improvements for Cognigy.AI

Cognigy Virtual Agents

- Added the Copilot: Knowledge Tile widget for AI Copilot

- Added support for SIPREC INVITE to Voice Copilot

- Replaced AgentAssistConfigs v2.0 with AICopilotConfigs in the Cognigy OpenAPI documentation

- Refined the context-aware search related tooltips of the Search Extract Output Node

- Added the button to forward the next best action content from the AI Copilot widget to the Live Agent reply section. The improvement is in the Copilot: Knowledge Tile and Copilot: Next Action Nodes

- Added the correct userId and sessionId for transcriptions to the Voice Copilot Endpoint

- Improved the Post NLU Transformer by exposing the full NLU object from the input

Cognigy Insights

- Improved the sidebar with a new design

Cognigy Voice Gateway

- Added support for SIPREC INVITE and the capability to choose a default SIPREC application to the Voice Gateway Self-Service Portal

For further information, check out our complete Release Notes here.
