From more flexible Generative AI model selection to enhanced agent efficiency through more streamlined workflows, here’s what awaits you in Cognigy.AI v4.53.

Orchestrate Anthropic Claude within Cognigy.AI

Following the release of our new Multi-Model LLM Orchestration tool two weeks ago, we’re excited to extend our range of supported models to Anthropic’s Claude and Claude Instant.

Anthropic positions Claude as a helpful, honest, and harmless AI model. It also offers impressive text processing capability, with a 100K-token context window, equivalent to roughly 75,000 words! This means you can drop lengthy documents or even a book into the prompt, and the model can ingest everything and synthesize the output in a matter of seconds. As such, Claude is well-suited for tasks like summarization and Q&A.
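To get a feel for what fits into that window, here is a rough sketch in Python. It assumes the ratio implied above (100K tokens for about 75,000 words, or 0.75 words per token) and counts words by splitting on whitespace. The function names are made up for illustration, and real token counts depend on the model's tokenizer, so treat the result as an estimate only.

```python
import math

# Assumption: ratio implied by "100K tokens ≈ 75,000 words".
WORDS_PER_TOKEN = 0.75
CONTEXT_WINDOW_TOKENS = 100_000


def estimate_tokens(text: str) -> int:
    """Estimate the token count from a whitespace-based word count."""
    word_count = len(text.split())
    return math.ceil(word_count / WORDS_PER_TOKEN)


def fits_in_context(text: str, window: int = CONTEXT_WINDOW_TOKENS) -> bool:
    """Return True if the estimated token count is within the window."""
    return estimate_tokens(text) <= window


if __name__ == "__main__":
    document = "word " * 60_000  # stand-in for a long document
    print(estimate_tokens(document), fits_in_context(document))
```

In practice you would also leave room for the instructions in your prompt and for the model's answer, so a document close to the limit is better split or shortened.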

Two versions of Claude are available today: Claude, a state-of-the-art high-performance model, and Claude Instant, a lighter, less expensive, and much faster option.

Anthropic Claude has already been available through Cognigy’s Extension Framework: you can add the Anthropic Prompt Node to your Flow in just a few clicks and use Claude at runtime to summarize the conversation, generate bot responses on the fly, and more.

The new native integration now lets you put Claude to work with even greater advantages. You can enjoy optimized speed and performance while maintaining centralized orchestration and management of multiple models within the LLM Resource. Likewise, this native support makes it easy to harness new LLM features and capabilities inside Cognigy.AI further down the road.

Enhance Agent Efficiency through User Inactivity Detection

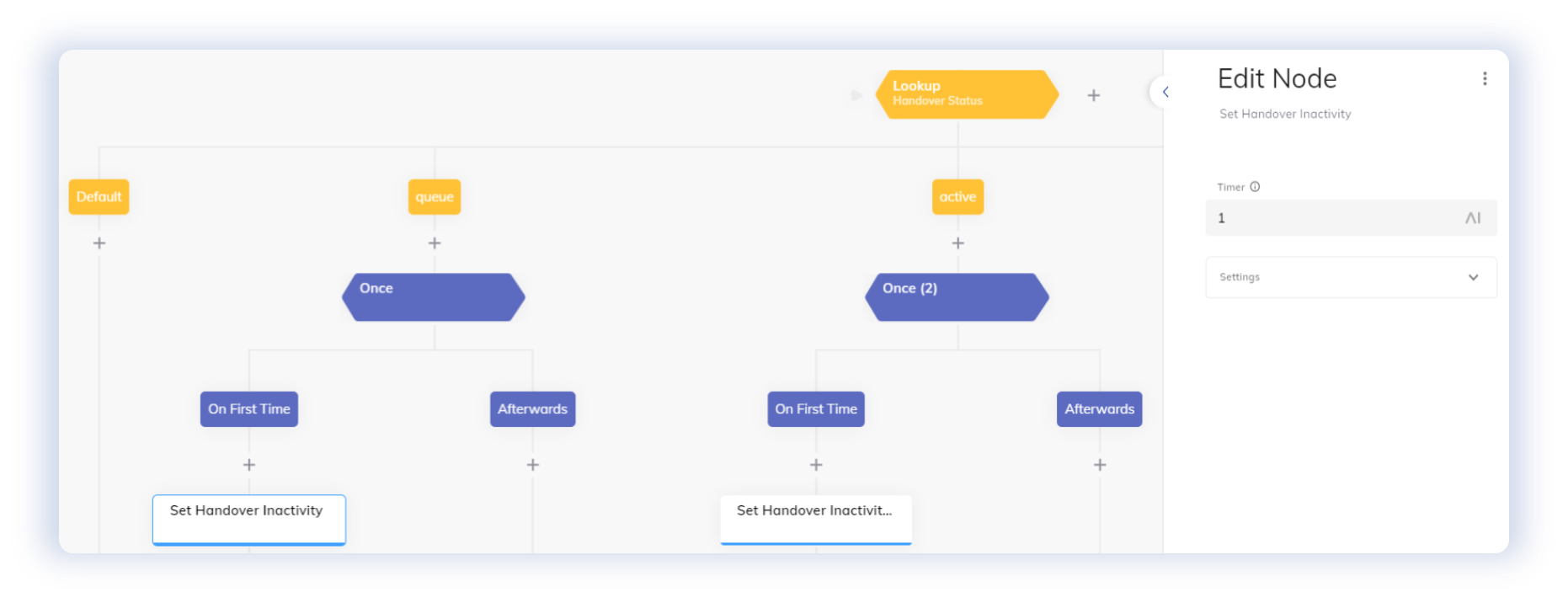

In addition to our long list of fast-evolving LLM features, Cognigy.AI also introduces a slew of other contact center-focused improvements. One highlight in v4.53 is the User Inactivity Detection feature.

If you work in the contact center space, you know that customers often drop out of a conversation midway, for any number of reasons. On text and digital channels, this often happens without service agents’ knowledge, so resources are wasted on abandoned interactions.

But that is about to change! With our latest release, we’ve added a new Node called Set Handover Inactivity. It allows you to specify a customer inactivity timeout after a handover. Once a timeout is detected, you can automatically trigger a follow-up message. If timeouts happen multiple times, you have the option to automatically close the dialogue.
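The decision logic described above can be sketched in a few lines of Python. This is only an illustration of the behavior, not the Node's actual implementation or configuration: the names InactivityPolicy, max_timeouts, and on_timeout are hypothetical, and the sketch assumes that the dialogue closes once a configured number of timeouts is reached.

```python
from dataclasses import dataclass


@dataclass
class InactivityPolicy:
    """Hypothetical settings, loosely modeled on the description above."""
    timeout_seconds: float   # how long to wait for the customer after a handover
    max_timeouts: int        # number of timeouts after which the dialogue closes
    follow_up_message: str   # message sent on each earlier timeout


def on_timeout(policy: InactivityPolicy, timeouts_so_far: int) -> tuple[str, int]:
    """Decide what to do when one more inactivity timeout is detected.

    Returns the action ("follow_up" or "close") and the updated timeout count.
    """
    count = timeouts_so_far + 1
    if count >= policy.max_timeouts:
        return "close", count
    return "follow_up", count


if __name__ == "__main__":
    policy = InactivityPolicy(
        timeout_seconds=120,
        max_timeouts=3,
        follow_up_message="Are you still there?",
    )
    count = 0
    for _ in range(3):
        action, count = on_timeout(policy, count)
        print(count, action)
```

With max_timeouts set to 3, the first two timeouts trigger the follow-up message and the third closes the dialogue. A customer reply would typically reset the counter, which this sketch leaves to the caller.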

Effectively identifying and resolving abandoned conversations allows you to free up service agents’ time for meaningful interactions, improving productivity and engagement.

For a step-by-step setup guide, refer to our documentation.

Other Improvements for Cognigy.AI

Cognigy Virtual Agents

- Added the capability to test an LLM connection

- Added a warning indicator when no model is configured for Generative AI use cases

- Updated German translations in the Cognigy UI

- Improved the Microsoft Azure OpenAI Connection type in the LLM settings

- Updated the demo webchat to v2.54.0

- Added the Mask IP Address toggle to the Data Protection Endpoint editor settings. This setting is available for most of the Endpoints

Cognigy Insights

- Added the German translations for new features in the Insights UI

Cognigy Live Agent

- Added the ability to create scoped canned responses at the personal, team, and global levels

- Improved team name handling so that names are saved with their original formatting

- Improved by allowing conversations to be unassigned from busy agents. To change this behavior, use the Assign conversation to a busy agent setting

- Added support for working with Sentry using the new environment variable SENTRY_DSN

Cognigy Voice Gateway

- Added the Call Tracing feature to the Recent calls section in the Self-Service Portal

For further information, check out our complete Release Notes here.
