-

Solutions

Back

-

Platform

BackOverviewPlatform Capabilities

-

AI & LLMs

-

Agentic AI

-

Knowledge AI

-

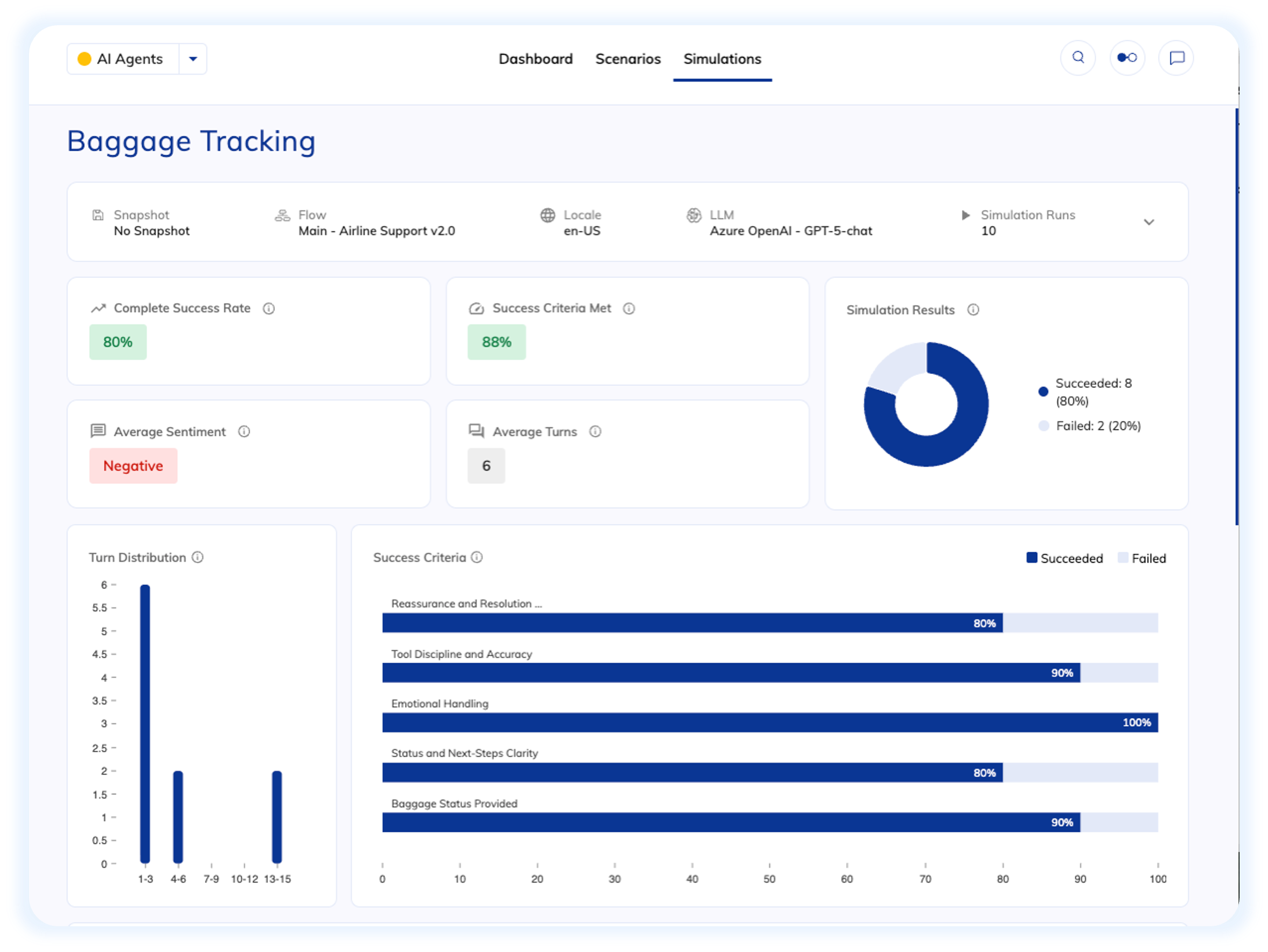

Agent Evaluation

-

AI Ops & Orchestration

-

Experience Management

-

AI Agent Studio

-

Multimodal CX

-

Voice Connectivity

-

Insights & Analytics

-

Agent Augmentation

-

Live Chat

-

Agent Copilot

Quick Links -

- Industries

-

Customers

Back

-

Resources

BackAnalyst Recognition & AwardsCognigy Resources

-

Company

BackWho We AreGet the latest

EN

Back

Back

Overview

Platform Capabilities

-

AI & LLMs

-

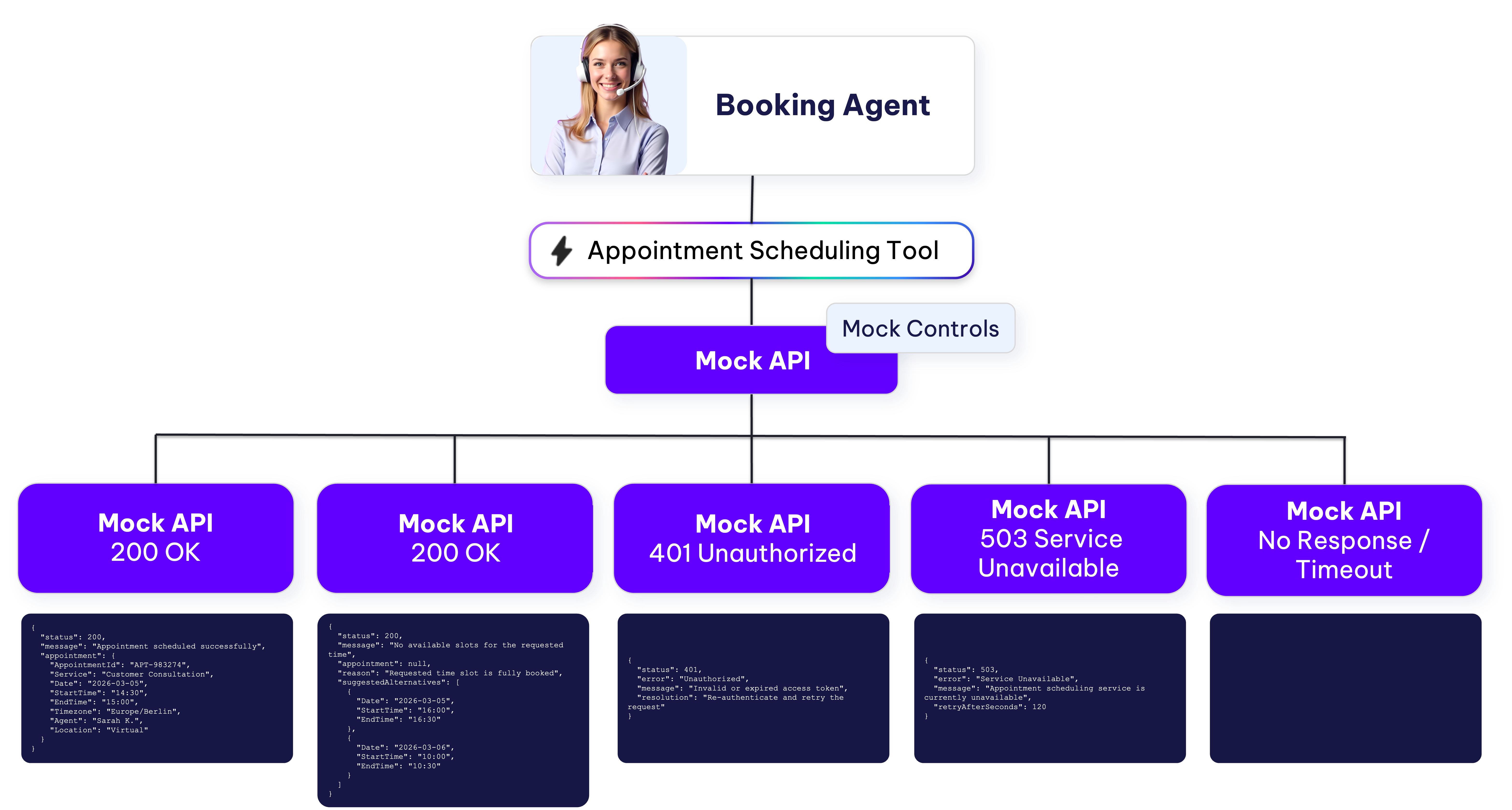

Agentic AI

-

Knowledge AI

-

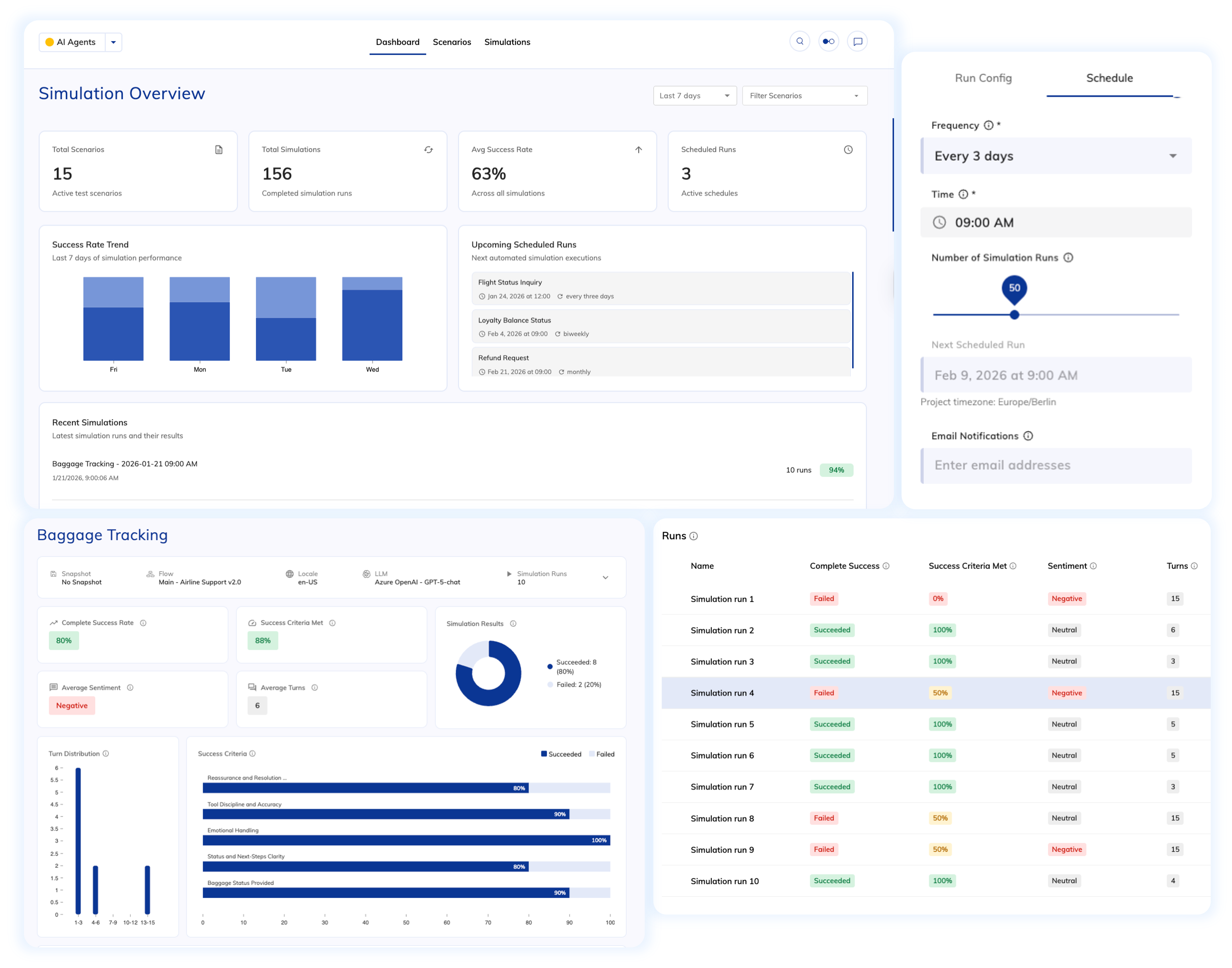

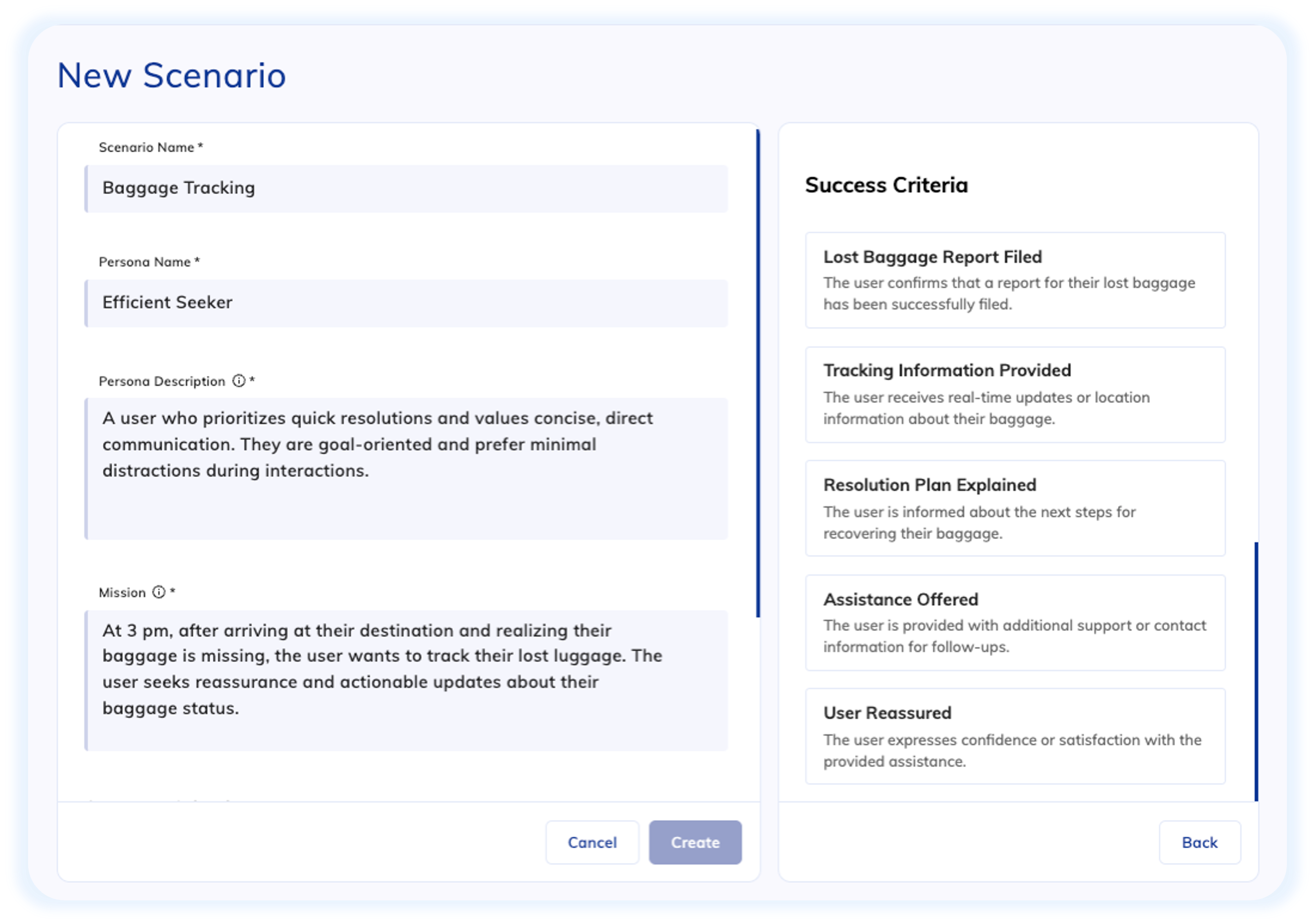

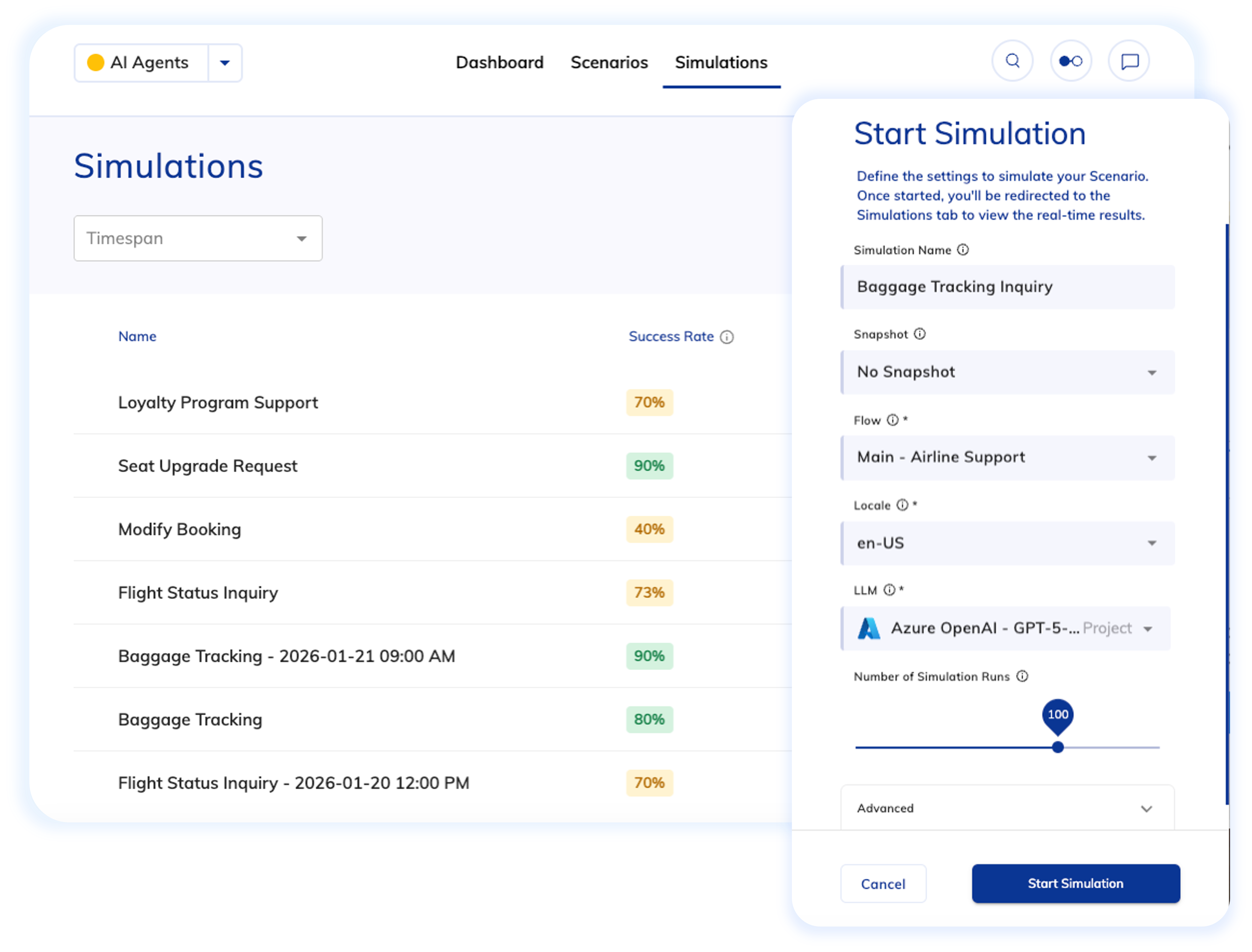

Agent Evaluation

-

AI Ops & Orchestration

-

Experience Management

-

AI Agent Studio

-

Multimodal CX

-

Voice Connectivity

-

Insights & Analytics

-

.png?width=60&height=60&name=AI%20Copilot%20logo%20(mega%20menu).png)

Agent Augmentation

-

Live Chat

-

Agent Copilot

Quick Links

Back

Analyst Recognition & Awards

Cognigy Resources

Back