Summary: Imagine interacting with an AI that doesn’t just answer your questions, but also remembers important details about you, adapting over time to become more helpful, intuitive, and tailored to your needs.

In this article, Sascha Wolter, Principal AI Advocate & Advisor at NiCE Cognigy, explores the essence of memory in AI Agents and how you can implement it for enterprise use cases with Cognigy.AI.

A New Frontier in Hyper-Personalized Assistance

The idea of memory in an AI context involves the capability of retaining information from past interactions, which allows the agent to build a more personalized relationship with the user.

Unlike traditional AI models, which treat every interaction independently, an AI Agent with memory can remember your preferences, context, and unique circumstances. This capability can improve user experience dramatically, as the AI can recall your past conversations, favorite topics, or even specific instructions you've given. For example, an AI that remembers your favorite restaurants or your go-to workout schedule can provide more personalized suggestions without requiring you to input the same information over and over again.

Creating memories for AI Agents is more than just storing data – it involves striking a careful balance between usefulness, privacy, and trust. The challenge lies in developing a system where users feel secure sharing information, knowing that it’s used only to enhance their experience.

Transparency about how data is stored and used, alongside the ability for users to edit or delete memories, is crucial to building this trust. This opens up exciting possibilities, not just in customer service or virtual assistance, but in areas like healthcare, education, and mental health, where AI that truly "knows" you (hyper-personalization) can make a real difference.

Current State and Technology

OpenAI's ChatGPT and Google's Gemini are two prominent AI systems that have integrated memory capabilities to enhance user experience. Both systems allow users to ask the AI to remember specific details, resulting in a more personalized interaction over time.

Both systems can remember user preferences for meeting notes, such as including headlines, bullet points, and action items. They can also recall specific details about your business, like owning a neighborhood coffee shop, to provide more relevant messaging suggestions. And they can remember personal details such as your favorite foods, which helps tailor recommendations, like suggesting restaurants more precisely.

In both systems, memories are stored as a list of statements that become part of the conversation context. It's like adding relevant information to your chat manually, but here it's done automatically. When the AI thinks something is worth remembering, it uses an internal tool to summarize and store the memory. Depending on the configuration, this memory is then saved in a list and used in future conversations.
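As a rough illustration of the idea, the sketch below turns a list of remembered statements into a text block that is placed in front of the conversation. The function name `buildContextWithMemories` and the message shape are assumptions for illustration; they do not reflect how ChatGPT or Gemini implement memory internally.

```javascript
// Hypothetical sketch: memories are plain statements kept in a list.
const memories = [
  "User owns a neighborhood coffee shop.",
  "User prefers meeting notes with headlines, bullet points, and action items.",
];

// Render the list as one system message and place it before the chat history.
function buildContextWithMemories(memoryList, chatHistory) {
  if (memoryList.length === 0) {
    return [...chatHistory];
  }
  const memoryBlock = memoryList.map((m) => `- ${m}`).join("\n");
  const systemMessage = {
    role: "system",
    content: `Known facts about the user:\n${memoryBlock}`,
  };
  return [systemMessage, ...chatHistory];
}

const context = buildContextWithMemories(memories, [
  { role: "user", content: "Draft a short promotion for this weekend." },
]);
console.log(context[0].content);
```

The point of the sketch is only that memories travel with every request as ordinary context, which is why they can be listed, edited, or removed like any other piece of text.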

These stored memories allow the AI to understand user needs better and provide more relevant responses, similar to how knowledge is built and utilized in human interactions. By leveraging these memories, the AI can add depth and personalization to its suggestions, enhancing its role as a helpful assistant in various aspects of users' lives.

Rebuilding and Using Memory for Enterprises

There are two types of memory for AI Agents in Cognigy.AI: long-term and short-term. Both integrate data into the context of your conversation in a technically similar way. The main difference is that Long-Term Memory leverages the user's contact profile information, making it available across multiple conversations, sessions, and AI Agents, while Short-Term Memory focuses on a single conversation, for example to track a selection (e.g., the selected plan) or the current topic (e.g., filing a claim for an incident).

Long-Term Memory

In Cognigy.AI, Long-Term Memory is stored as part of the user's contact profile, giving you full control and the ability to provide users with the tools they need to manage their information. While this article does not cover legal aspects such as the General Data Protection Regulation (GDPR) or the AI Act, it is important to consider the relevant requirements for such compliance.

Contact Profile & Memory

You can access the current user profile through the interaction panel and manage all user profiles in the Contact Profiles view, where you can also edit or delete profile details. An API is also available for all of these actions.

Long-Term Memory Injection

To incorporate the user-related memories into the current conversation, you can either access the user profile directly or inject the required aspects through the AI Agent Node in the Memory Handling section as Long-Term Memory. You can also adjust memory usage settings both in the AI Agent and in the AI Agent Node.

Although using memory is easy, one question remains: How do you add, update, and delete memories?

Add Memory

The simplest way to add memories is by using the Add Memory Node, which allows you to store any text in the user's memory. Once stored, it is immediately available via the Long-Term Memory of your agent.

Add Memory Tool

To automate memory creation, you can use an AI Agent Tool Action. An add_memory action can be described as follows:

This tool stores user-provided information such as facts, preferences (e.g., "I want", "I like"), events, hobbies, or specific requests (e.g., "vegan"). It captures key details shared by the user. For instance, if the user says, "I love science fiction," it stores: "User enjoys science fiction as a genre."

Additionally, a memory parameter is required, such as:

User-related fact or memory in one sentence.

The value for the memory parameter is generated by the Large Language Model as outlined. This parameter can then be utilized in the Add Memory Node to update the memory with a new value:

Add Memory Node

This short CognigyScript retrieves the memory generated by the generative language model to be added to the user contact profile.
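For orientation, the tool description and memory parameter described above could be written down as a function-calling style definition, together with a handler that appends the generated sentence to a memory list. This is a sketch only: in Cognigy.AI the tool is configured on the AI Agent Node and the Add Memory Node does the storing. The object layout, the `handleAddMemory` helper, and the `{ text, timestamp }` entry shape (mirroring the fields used by the update script later in this article) are assumptions for illustration.

```javascript
// Hypothetical tool definition; the layout follows common function-calling schemas.
const addMemoryTool = {
  name: "add_memory",
  description:
    "Stores user-provided information such as facts, preferences, events, " +
    "hobbies, or specific requests.",
  parameters: {
    type: "object",
    properties: {
      memory: {
        type: "string",
        description: "User-related fact or memory in one sentence.",
      },
    },
    required: ["memory"],
  },
};

// Hypothetical handler: returns a new list with the memory appended.
function handleAddMemory(memories, toolArgs, now = new Date()) {
  const text = String(toolArgs.memory ?? "").trim();
  if (text === "") {
    throw new Error("add_memory requires a non-empty 'memory' argument");
  }
  return [...memories, { text, timestamp: now.toISOString() }];
}

const updated = handleAddMemory([], {
  memory: "User enjoys science fiction as a genre.",
});
console.log(updated);
```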

Tools, Parameters, and Prompt Engineering:

The "magic" of this process lies in leveraging the Large Language Model (LLM) and the applied prompt engineering. The model interprets the user input and attempts to match it to a tool action based on the tool's description, filling in the defined parameters as specified. Sometimes the model can accomplish this on its own; if not, a hint like "Remember this." can do the trick. If that does not work as expected, you can refine the descriptions or provide additional instructions to the AI Agent Node according to your use case to improve the results. The details may vary depending on the model used, the total set of descriptions and instructions, and their possible interactions.

Update Memory

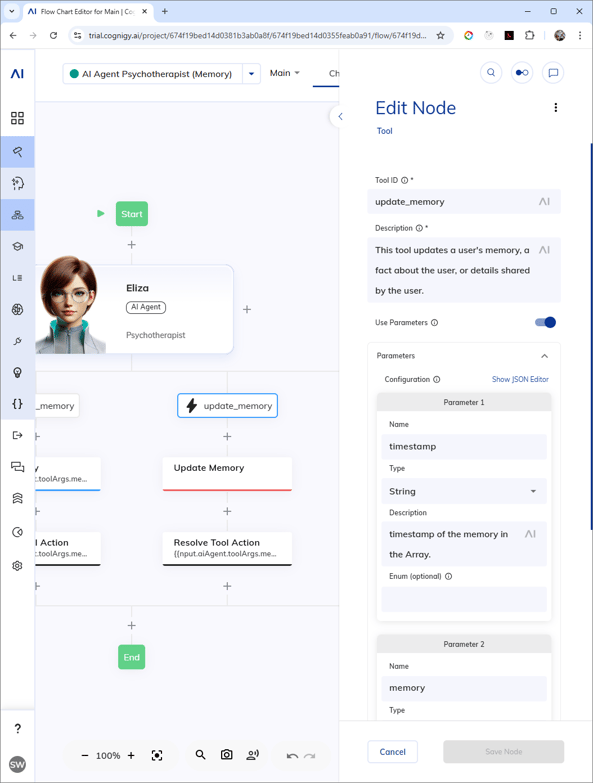

Update Memory Tool

An update_memory action works much like the add_memory action. Its description is:

This tool updates a user's memory, a fact about the user, or details shared by the user.

In addition to the memory parameter (used for updating memory), a timestamp parameter is required to identify the specific old memory to be updated. Since memories are stored with their associated creation date (timestamp), the LLM can retrieve this detail from the context and automatically populate it in the corresponding parameter.
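To make the role of the timestamp concrete, here is a small sketch in which each memory is rendered into the context together with its timestamp, and the arguments of a tool call are checked before use. The rendering format, the sample data, and the helpers `renderMemoriesWithTimestamps` and `missingArgs` are hypothetical and not part of Cognigy.AI.

```javascript
// Example data only; the dates are made up for illustration.
const storedMemories = [
  { text: "User enjoys science fiction as a genre.", timestamp: "2025-01-10T09:00:00.000Z" },
  { text: "User is vegan.", timestamp: "2025-01-12T14:30:00.000Z" },
];

// Each line carries the timestamp so the model can copy it into the tool call.
function renderMemoriesWithTimestamps(memories) {
  return memories.map((m) => `[${m.timestamp}] ${m.text}`).join("\n");
}

// Returns the names of required parameters that are missing or empty.
function missingArgs(requiredNames, args) {
  return requiredNames.filter(
    (name) => typeof args[name] !== "string" || args[name].trim() === ""
  );
}

console.log(renderMemoriesWithTimestamps(storedMemories));

// Arguments a model might produce after the user says "Actually, I'm vegetarian now."
const exampleArgs = {
  memory: "User is vegetarian.",
  timestamp: "2025-01-12T14:30:00.000Z",
};
console.log(missingArgs(["memory", "timestamp"], exampleArgs));
```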

The update itself is now just a small script:

    // The profile memories have to be changed on the Array level (here using lodash)
    const memories = _.clone(profile.memories);
    const memory = _.find(memories, function (item) {
      return item.timestamp === input.aiAgent.toolArgs.timestamp;
    });
    // Only write back if a memory with the given timestamp exists
    if (memory) {
      memory.text = input.aiAgent.toolArgs.memory;
      profile.memories = memories;
    }
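The same update logic can be sketched outside of a Code Node as a pure function, which makes it easier to test. The version below uses plain array methods instead of lodash and reports whether any memory matched the timestamp; `updateMemory` and the sample data are illustrative assumptions.

```javascript
// Returns a new list in which entries with the matching timestamp get new text.
// The input list and its entries are left unchanged. If several entries share
// the timestamp, all of them are updated.
function updateMemory(memories, timestamp, newText) {
  let found = false;
  const next = memories.map((item) => {
    if (item.timestamp !== timestamp) {
      return item;
    }
    found = true;
    return { ...item, text: newText };
  });
  return { memories: next, found };
}

const before = [
  { text: "User is vegan.", timestamp: "2025-01-12T14:30:00.000Z" },
];
const result = updateMemory(before, "2025-01-12T14:30:00.000Z", "User is vegetarian.");
console.log(result.found, result.memories[0].text);
console.log(before[0].text); // the original entry is untouched
```

Returning a `found` flag lets the calling Flow decide what to tell the user when the model supplies a timestamp that does not exist.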

Delete Memory

Delete Memory Tool

A delete_memory action is even easier. The description only changes from update to delete:

This tool deletes or forgets a user's memory, a fact about the user, or details shared by the user.

The timestamp is the only parameter needed to identify the memory, which is then deleted by the following code in a Code Node:

    // The profile memories have to be changed on the Array level (here using lodash)
    const memories = _.clone(profile.memories);
    profile.memories = _.filter(memories, function (item) {
      return item.timestamp !== input.aiAgent.toolArgs.timestamp;
    });
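As a standalone sketch of the same idea, the function below removes the entry with a given timestamp and reports how many entries were removed, so the agent can tell the user when nothing matched. `deleteMemory` is a hypothetical helper that uses plain array methods, and the sample data is made up.

```javascript
// Returns the remaining memories plus the number of entries that were removed.
function deleteMemory(memories, timestamp) {
  const remaining = memories.filter((item) => item.timestamp !== timestamp);
  return { memories: remaining, removed: memories.length - remaining.length };
}

const current = [
  { text: "User enjoys science fiction as a genre.", timestamp: "2025-01-10T09:00:00.000Z" },
  { text: "User is vegan.", timestamp: "2025-01-12T14:30:00.000Z" },
];

const afterDelete = deleteMemory(current, "2025-01-10T09:00:00.000Z");
console.log(afterDelete.removed, afterDelete.memories.length);

// A timestamp that matches nothing leaves the list as it was.
const noMatch = deleteMemory(current, "1999-01-01T00:00:00.000Z");
console.log(noMatch.removed);
```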

Short-Term Memory

In addition to Long-Term Memory that persists across sessions, you can inject Short-Term Memory into an AI Agent for any specific conversation.

A powerful use case for Short-Term Memory is supplying essential knowledge needed for a particular Agent Job. Modern models, such as OpenAI’s GPTs, Google’s Gemini models, and others, now support massive context windows, reaching hundreds of thousands or even millions of tokens. This means you can load entire documents, databases, or manuals directly into an agent’s memory, eliminating the complexity and fragility of traditional retrieval methods for smaller datasets.

For compact data sets, Short-Term Memory is a fast, maintenance-light alternative to RAG, enabling quicker implementation and simpler management. The content can also be dynamic: you can reference external sources, connect to transactional services, or inject real-time data using CognigyScript (JavaScript).
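As a sketch of this approach, the snippet below turns a small data set into a text block that could be injected as Short-Term Memory, after a rough size check. The plan data, the helper `buildShortTermMemory`, and the four-characters-per-token estimate are assumptions for illustration; actual token counts depend on the model's tokenizer.

```javascript
// Made-up example data standing in for a small product catalog.
const plans = [
  { name: "Basic", pricePerMonth: 10, dataGb: 5 },
  { name: "Plus", pricePerMonth: 20, dataGb: 20 },
];

// Very rough heuristic; real tokenizers will give different counts.
function estimateTokens(text) {
  return Math.ceil(text.length / 4);
}

// Builds the text to inject and refuses data sets that exceed the budget.
function buildShortTermMemory(title, rows, maxTokens) {
  const lines = rows.map(
    (p) => `- ${p.name}: ${p.pricePerMonth} per month, ${p.dataGb} GB data`
  );
  const text = `${title}\n${lines.join("\n")}`;
  if (estimateTokens(text) > maxTokens) {
    throw new Error("Data set too large for direct injection; consider RAG instead");
  }
  return text;
}

const shortTermMemory = buildShortTermMemory("Available plans:", plans, 2000);
console.log(shortTermMemory);
```

The size check marks the point at which a data set stops being "compact" and retrieval becomes the better fit.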

Best of all, you can combine Short-Term Memory with RAG to get the best of both worlds in more complex scenarios.

Short-Term Memory Injection

Wrapping Up

The future of AI is not just about intelligence but also about the depth of connection it can foster. Memories for AI Agents represent a step toward a more nuanced, human-like interaction – one where the agent not only processes information but also understands, learns, and grows alongside you.

So, what will you build to make your AI Agents more memorable?
