Cognigy.AI 2025.17 delivers meaningful updates for voice and agentic experiences, including new options for speech data residency, more flexible embedding model support, and richer context for AI Agents.

Enhanced Compliance and Lower Latency for EU-Based Voice Experiences

A key highlight of this release is support for ElevenLabs and Deepgram’s EU-hosted speech services. With this option, all audio data is processed within the EU, facilitating compliance with stringent EU data residency policies and offering peace of mind for privacy-conscious enterprises.

In addition to supporting compliance, processing audio within the region significantly reduces speech latency, enabling faster, more natural voice interactions for end users. You can activate this feature directly in the Voice Gateway Self-Service Portal under the speech services settings.

Note: Support for Deepgram's EU endpoint is available on demand. Please contact us for activation after you have received access from Deepgram.

Flexible Embedding Model Selection for Knowledge AI

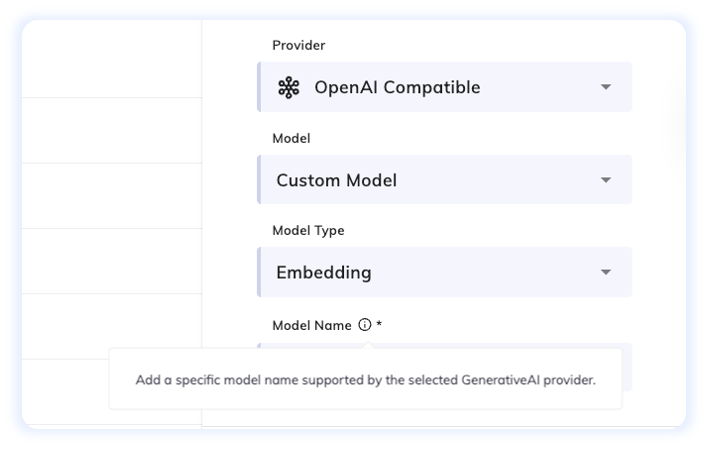

Expanding on our universal connector for OpenAI-compatible chat and completion models, the latest release introduces support for OpenAI-compatible embedding models.

This gives enterprise teams the freedom to integrate their preferred embedding models into Knowledge AI for knowledge ingestion. The result: optimized semantic search behavior, improved content matching accuracy, and seamless alignment with your chosen AI stack.
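For orientation, here is a minimal Python sketch of the request and response shapes defined by the OpenAI embeddings API (`POST /v1/embeddings`), which "OpenAI-compatible" servers are expected to mirror. It does not show how the connector is configured in Cognigy.AI; the model name `my-embedding-model` and the stubbed response are hypothetical placeholders, and no network call is made. Cosine similarity is included only to illustrate how returned vectors are typically compared in semantic search.

```python
import json
from typing import Any


def build_embedding_request(model: str, texts: list[str]) -> dict[str, Any]:
    """Build the JSON body for an OpenAI-compatible POST /v1/embeddings call."""
    # The OpenAI embeddings API accepts a string or a list of strings as "input".
    return {"model": model, "input": texts}


def parse_embedding_response(body: dict[str, Any]) -> list[list[float]]:
    """Return the embedding vectors ordered by each item's "index" field."""
    items = sorted(body["data"], key=lambda item: item["index"])
    return [item["embedding"] for item in items]


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine similarity, a common score for comparing embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = sum(x * x for x in a) ** 0.5
    norm_b = sum(y * y for y in b) ** 0.5
    if norm_a == 0 or norm_b == 0:
        # Guard against division by zero for all-zero vectors.
        return 0.0
    return dot / (norm_a * norm_b)


if __name__ == "__main__":
    # "my-embedding-model" is a hypothetical model name.
    request = build_embedding_request("my-embedding-model", ["refund policy", "opening hours"])
    print(json.dumps(request))
    # Stubbed response in the OpenAI-compatible shape, with vectors shortened for readability.
    response = {
        "object": "list",
        "data": [
            {"object": "embedding", "index": 1, "embedding": [0.0, 1.0]},
            {"object": "embedding", "index": 0, "embedding": [1.0, 0.0]},
        ],
        "model": "my-embedding-model",
    }
    vectors = parse_embedding_response(response)
    print(cosine_similarity(vectors[0], vectors[1]))
```

Sorting by `index` matters because the API identifies each vector by its position in the original `input` list rather than relying on array order alone.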

Rich Media Context Handling for LLM-Driven AI Agents

With Cognigy, you can deliver seamless interactions that combine generative responses with structured UI elements. To further enhance these experiences, this release introduces a more robust approach to handling rich media context in LLM-driven AI Agents.

A new Include Rich Media Context option is now available in the AI Agent, LLM Prompt, and Get Transcript Nodes. This option passes either structured content, such as button titles, quick reply text, and image alt text, or a predefined Textual Description into the AI Agent’s context (that is, the transcript and LLM prompts).

This ensures the AI Agent is aware of the rich media elements shown to the user, so its responses stay accurate, consistent with those elements, and better grounded in context.
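To make the idea concrete, the following Python sketch flattens a bot message with rich media into a single text line that an LLM could read in a transcript. It is hypothetical throughout: the `RichMessage` structure, the `bot:` prefix, the bracketed labels, and the rule that a Textual Description replaces the extracted element text are illustrative assumptions, not Cognigy's actual transcript format.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class RichMessage:
    """Hypothetical shape of a bot output that pairs text with rich media."""
    text: str
    buttons: list[str] = field(default_factory=list)        # button titles
    quick_replies: list[str] = field(default_factory=list)  # quick reply texts
    image_alt: Optional[str] = None                         # image alt text
    description: Optional[str] = None                       # predefined Textual Description


def to_transcript_line(msg: RichMessage, include_rich_media: bool = True) -> str:
    """Flatten a message into one transcript line (hypothetical format)."""
    parts = [msg.text]
    if include_rich_media:
        if msg.description:
            # Assumption: a predefined description is used instead of the element text.
            parts.append(f"[Rich media: {msg.description}]")
        else:
            if msg.buttons:
                parts.append("[Buttons: " + ", ".join(msg.buttons) + "]")
            if msg.quick_replies:
                parts.append("[Quick replies: " + ", ".join(msg.quick_replies) + "]")
            if msg.image_alt:
                parts.append(f"[Image: {msg.image_alt}]")
    return "bot: " + " ".join(parts)
```

For example, `to_transcript_line(RichMessage("How can I help?", buttons=["Track order", "Talk to an agent"]))` returns `"bot: How can I help? [Buttons: Track order, Talk to an agent]"`. With `include_rich_media=False`, only `"bot: How can I help?"` remains, which is the kind of gap in context that the new option is meant to close.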

Other Improvements:

Cognigy.AI

- Added a legacy tag to ElevenLabs legacy voice names. ElevenLabs legacy voices aren't available when using the ElevenLabs EU endpoint

- Optimized how Contact Profile data is fetched for the RingCentral Engage handover provider while sending user messages and ensured the data is present when the handover starts

- Added the Enable User Connects Message and Enable User Disconnects Message options for Salesforce MIAW in the Handover to Agent Node. These options send a message to the human agent in Salesforce MIAW when the user reconnects to or disconnects from the conversation

- Added logic that prevents Salesforce MIAW handovers from ending when the human agent is removed from the conversation. With this logic, you no longer need to set the `DISABLE_ABORT_HANDOVER_ON_SALESFORCE_MIAW_PARTICIPANT_CHANGE` feature flag

- Added OAuth 2.0 support for the Azure OpenAI LLM provider
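The release note does not say which OAuth 2.0 grant the Azure OpenAI provider uses. As a hedged illustration only, this Python sketch builds a standard client credentials token request against the Microsoft identity platform v2.0 endpoint, using the `https://cognitiveservices.azure.com/.default` scope that Azure OpenAI accepts for Microsoft Entra ID tokens. The tenant ID, client ID, and secret are placeholders, and no request is actually sent.

```python
from urllib.parse import urlencode

# Scope used to request Microsoft Entra ID tokens for Azure OpenAI (Azure AI services).
AZURE_COGNITIVE_SCOPE = "https://cognitiveservices.azure.com/.default"


def build_token_request(tenant_id: str, client_id: str, client_secret: str) -> tuple[str, str]:
    """Return (token URL, form-encoded body) for an OAuth 2.0 client credentials grant."""
    url = f"https://login.microsoftonline.com/{tenant_id}/oauth2/v2.0/token"
    body = urlencode({
        "grant_type": "client_credentials",
        "client_id": client_id,
        "client_secret": client_secret,
        "scope": AZURE_COGNITIVE_SCOPE,
    })
    return url, body


def bearer_header(token_response: dict) -> dict[str, str]:
    """Turn the token endpoint's JSON response into an Authorization header."""
    return {"Authorization": f"Bearer {token_response['access_token']}"}
```

The body is sent as `application/x-www-form-urlencoded`, and the returned `access_token` then replaces a static API key on calls to the Azure OpenAI deployment.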

Cognigy Live Agent

- Added the capability to use an existing Persistent Volume Claim (PVC) for storage. Users with an on-premises installation and a volume claim in Microsoft Azure or AWS Elastic Kubernetes Service can now set the PVC via `existingClaim` in `values.yaml`

For further information, check out our complete Release Notes here.
