Amazon recently introduced the Alexa Presentation Language (APL). APL lets developers build interactive, multimodal Skills for devices with screens. Thanks to the generic Alexa directive support built into Cognigy, you can already use this new capability.

All you need to do is:

- Activate the Alexa Presentation Language interface.

- Create visuals for your Alexa Skill.

- Add the APL RenderDocument Skill directive to your conversational responses.

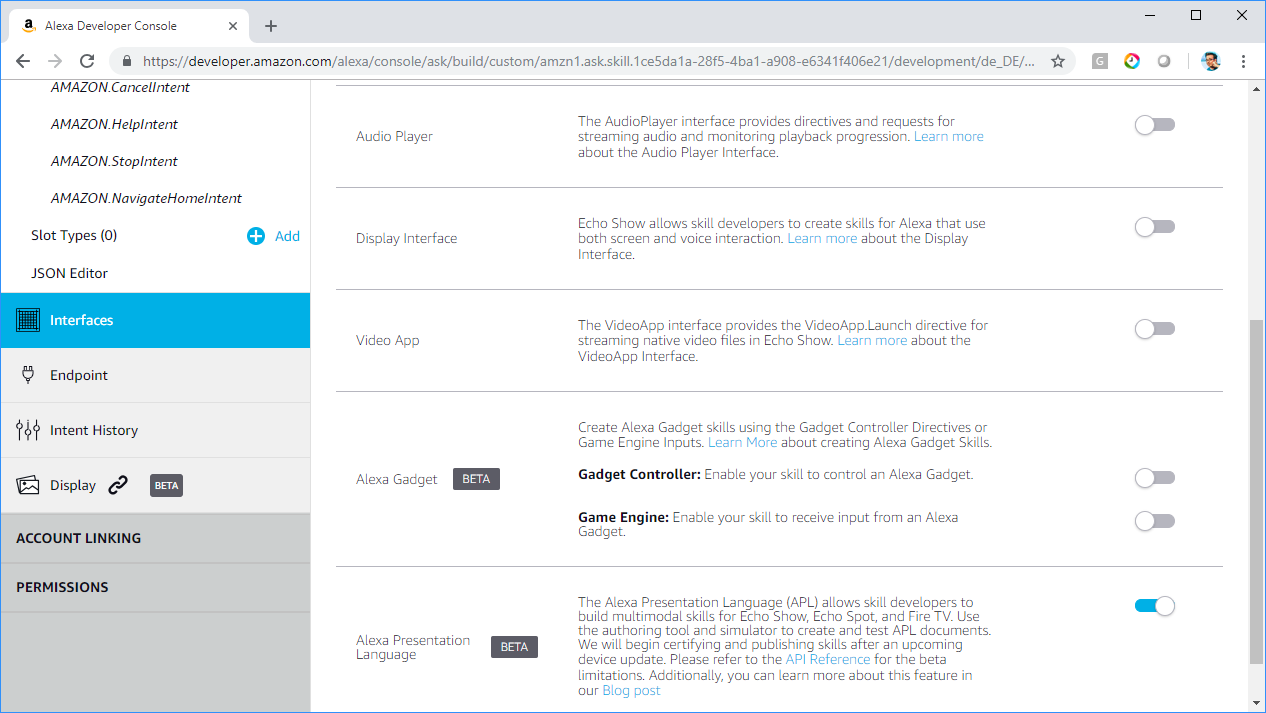

Enabled APL Interface in the Alexa developer console

To activate this feature, go to the Alexa developer console, select your Skill and enable the Alexa Presentation Language in the Interfaces section.

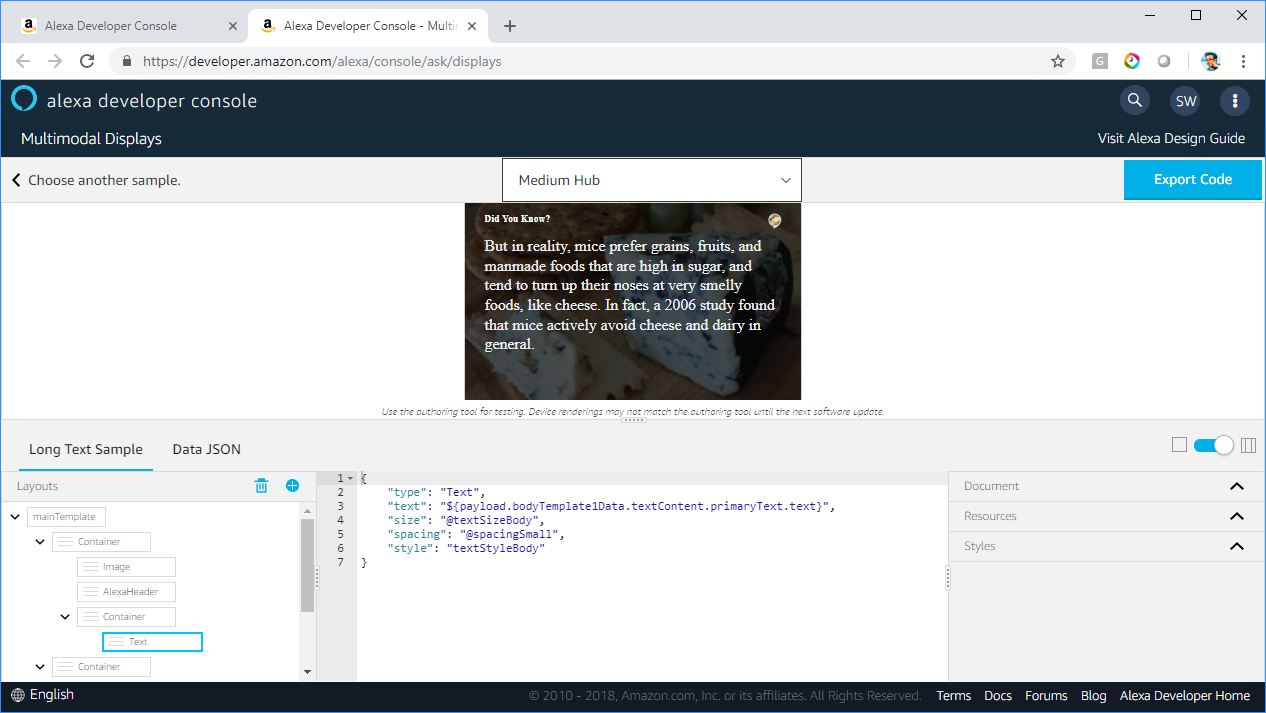

Amazon’s APL authoring tool

Next, create the visuals using APL. APL documents are written in JSON (JavaScript Object Notation), and you can write them by hand as described in the specification. It is easier and less error-prone, however, to start with the APL Authoring Tool provided by Amazon.

There you can create visuals (using a sample template or starting from scratch), export the final document, and copy its “document” and “datasources” properties for your Alexa response. The JSON structure for the APL RenderDocument Skill directive also requires a type and a custom token.

The JSON looks roughly like this:

    {
      "type": "Alexa.Presentation.APL.RenderDocument",
      "token": "anydocument",
      "document": {
        …
      },
      "datasources": {
        …
      }
    }
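If you prefer to assemble the directive in code rather than by hand, the wrapping step can be sketched in a few lines of Python. This is only an illustration under assumptions: the helper name `build_render_directive` is made up for this example, and the `exported` dictionary is a tiny placeholder standing in for whatever the authoring tool actually exports.

```python
import json

APL_RENDER_TYPE = "Alexa.Presentation.APL.RenderDocument"


def build_render_directive(exported: dict, token: str = "anydocument") -> dict:
    """Wrap the exported "document" and "datasources" in a RenderDocument directive.

    Hypothetical helper for illustration; not part of Cognigy or the Alexa SDK.
    """
    return {
        "type": APL_RENDER_TYPE,
        "token": token,
        "document": exported["document"],
        # Fall back to an empty object if the export has no data sources.
        "datasources": exported.get("datasources", {}),
    }


# Placeholder export (assumption): a real export from the authoring tool
# contains your actual layout and data.
exported = {
    "document": {"type": "APL", "version": "1.0", "mainTemplate": {"items": []}},
    "datasources": {},
}

directive = build_render_directive(exported)
print(json.dumps(directive, indent=2))
```

The printed JSON has the same four top-level properties as the structure above and can be pasted wherever the directive is expected.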

Alexa directive in a Cognigy Say Node

The last step is to create a Say Node in Cognigy, select the Alexa tab, and add the JSON document as a new directive.

You can also send APL commands to an already-rendered APL document using the Alexa.Presentation.APL.ExecuteCommands directive. To go a step further, you can distinguish between devices with and without APL support by checking in your conversation flow whether the Alexa.Presentation.APL property exists under ci.data.context.System.device.supportedInterfaces.
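Both ideas can be sketched in Python. Treat this as a rough illustration, not as Cognigy's implementation: the function names are invented for this example, the `context` argument is assumed to be a plain dictionary shaped like the Alexa request context, and the component id `myPager` is a hypothetical id you would have defined in your own APL document.

```python
APL_INTERFACE = "Alexa.Presentation.APL"


def supports_apl(context: dict) -> bool:
    """Return True if the device lists APL among its supported interfaces."""
    interfaces = (
        context.get("System", {})
        .get("device", {})
        .get("supportedInterfaces", {})
    )
    return APL_INTERFACE in interfaces


def build_execute_commands(token: str, commands: list) -> dict:
    """Build an ExecuteCommands directive for an already-rendered document.

    The token should match the token of the RenderDocument directive
    that rendered the document.
    """
    return {
        "type": "Alexa.Presentation.APL.ExecuteCommands",
        "token": token,
        "commands": commands,
    }


# Example contexts (assumption: shaped like the Alexa request context).
screen_device = {"System": {"device": {"supportedInterfaces": {APL_INTERFACE: {}}}}}
voice_only_device = {"System": {"device": {"supportedInterfaces": {}}}}

if supports_apl(screen_device):
    # "myPager" is a hypothetical component id from your own APL document.
    directive = build_execute_commands(
        "anydocument",
        [{"type": "SetPage", "componentId": "myPager", "value": 1}],
    )
```

On a voice-only device, `supports_apl` returns `False`, so the flow can skip the visual directives and answer with speech alone.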

You can also handle user events that are sent to your Skill. To do this, check for the Alexa.Presentation.APL.UserEvent request type, which can be found under ci.data.request.type.
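As a minimal sketch of that check, the following Python function inspects a request and pulls out the parts of a user event you would typically act on. The function name and the sample request are assumptions made for this example; the `arguments` and `token` fields follow the shape of Alexa's UserEvent request.

```python
USER_EVENT_TYPE = "Alexa.Presentation.APL.UserEvent"


def extract_user_event(request: dict):
    """Return the token and arguments of an APL user event, or None otherwise.

    Hypothetical helper for illustration; `request` is assumed to be a plain
    dictionary shaped like the Alexa request object.
    """
    if request.get("type") != USER_EVENT_TYPE:
        return None
    return {
        "token": request.get("token"),
        "arguments": request.get("arguments", []),
    }


# Sample request (assumption): sent when the user touches a component whose
# SendEvent command passes the argument "itemSelected".
sample_request = {
    "type": USER_EVENT_TYPE,
    "token": "anydocument",
    "arguments": ["itemSelected"],
}

event = extract_user_event(sample_request)
not_an_event = extract_user_event({"type": "IntentRequest"})
```

Returning `None` for every other request type keeps the normal intent handling in your flow untouched.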

Start Now

See our Alexa quick start video and start your Alexa Skill now using the free Community Edition. We can't wait to see what you will build.

Written by Sascha Wolter

Sascha Wolter is a professional developer and user experience enthusiast. His true passion is improving human-computer interaction.

He loves to build conversational and multimodal experiences with text (chatbots) and voice (aka Alexa) and, as such, he works as Senior UX Consultant and Principal Technology Evangelist at Cognigy.
